How to use s3cmd to synchronize files to DigitalOcean Spaces object storage

S3cmd is a free command-line tool and client for uploading, retrieving, and managing data in Amazon S3 and other cloud storage services that use the S3 protocol (such as Google Cloud Storage or DreamHost DreamObjects). It is best suited to advanced users who are comfortable with command-line programs, and it works well in batch scripts and automated backups to S3 (triggered by cron, for example). S3cmd is written in Python. It is an open source project released under the GNU General Public License v2 (GPLv2) and is free for both commercial and private use; you pay only Amazon for the storage you use. Since its first release in 2008, S3cmd has gained many features and options. We recently counted more than 60 command-line options, including multipart upload, encryption, incremental backup, s3 sync, ACL and metadata management, S3 bucket size, bucket policies, and more.

Here is how to use s3cmd to synchronize files to DigitalOcean Spaces object storage.

Install s3cmd

Install from the distribution's package manager:

`apt-get install s3cmd # Debian / Ubuntu`

`yum install s3cmd # CentOS / Fedora`

Installing s3cmd this way is not recommended, because the packaged version is often too old. It is better to install it with pip as follows, or to download s3cmd from its website and install it yourself.

Install pip:

`yum install python-pip # CentOS`

`apt-get install python-pip # Debian / Ubuntu`

`apt-get install python-setuptools`

Install s3cmd:

`pip install s3cmd`
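After installing, it can help to confirm that s3cmd is actually on your PATH and to see which version you ended up with. The following is a minimal sketch; `check_tool` is a helper name made up for this illustration, not part of s3cmd.

```shell
#!/bin/sh
# check_tool is a hypothetical helper: it reports whether a command is on PATH.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1 found at $(command -v "$1")"
        return 0
    fi
    echo "$1 not found; check the installation steps" >&2
    return 1
}

# Only ask for the version if s3cmd is actually installed.
if check_tool s3cmd; then
    s3cmd --version
fi
```

If the reported version is still an old one, the distribution package may be shadowing the pip installation earlier in your PATH.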

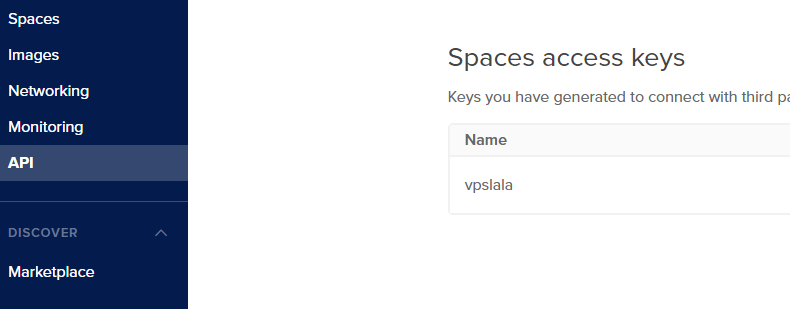

First, create an API key for DigitalOcean Spaces (an access key and a secret key) on the API page of the control panel:

https://cloud.digitalocean.com/account/api

Configure s3cmd to sync files to DigitalOcean Spaces

You can run `s3cmd --configure` to configure s3cmd interactively.

For DigitalOcean Spaces, however, it is easier to write the s3cmd configuration file directly. The full file follows, with comments on the settings you need to change.

Create a `.s3cfg` file in your home directory and add the following:

[default]

# Fill in your Spaces access key
access_key = yourkey

access_token =

add_encoding_exts =

add_headers =

# The DigitalOcean Spaces server address for your region, shown in the control panel
bucket_location = sfo2.digitaloceanspaces.com

ca_certs_file =

cache_file =

check_ssl_certificate = True

check_ssl_hostname = True

cloudfront_host = cloudfront.amazonaws.com

connection_pooling = True

content_disposition =

content_type =

default_mime_type = binary/octet-stream

delay_updates = False

delete_after = False

delete_after_fetch = False

delete_removed = False

dry_run = False

enable_multipart = True

encrypt = False

expiry_date =

expiry_days =

expiry_prefix =

follow_symlinks = False

force = False

get_continue = False

gpg_command = /usr/bin/gpg

gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s

gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s

gpg_passphrase =

guess_mime_type = True

# DigitalOcean Spaces server address, same as above
host_base = sfo2.digitaloceanspaces.com

# Very important: the Spaces address must be correct here too, and the leading %(bucket)s must be kept
host_bucket = %(bucket)s.sfo2.digitaloceanspaces.com

human_readable_sizes = False

invalidate_default_index_on_cf = False

invalidate_default_index_root_on_cf = True

invalidate_on_cf = False

kms_key =

limit = -1

limitrate = 0

list_md5 = False

log_target_prefix =

long_listing = False

max_delete = -1

mime_type =

multipart_chunk_size_mb = 15

multipart_max_chunks = 10000

preserve_attrs = True

progress_meter = True

proxy_host =

proxy_port = 0

public_url_use_https = False

put_continue = False

recursive = False

recv_chunk = 65536

reduced_redundancy = False

requester_pays = False

restore_days = 1

restore_priority = Standard

# Fill in your Spaces secret key
secret_key = yourkey

send_chunk = 65536

server_side_encryption = False

signature_v2 = False

signurl_use_https = False

simpledb_host = sdb.amazonaws.com

skip_existing = False

socket_timeout = 300

stats = False

stop_on_error = False

storage_class =

throttle_max = 100

upload_id =

urlencoding_mode = normal

use_http_expect = False

use_https = True

use_mime_magic = True

verbosity = WARNING

# Do not modify
website_endpoint = http://%(bucket)s.s3-website-%(location)s.amazonaws.com/

website_error =

website_index = index.html

Apart from the settings marked with comments, which you need to fill in or check, leave everything else at its default value.
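Since only a handful of settings differ from the defaults, the file can also be generated from a script. The sketch below writes just the DigitalOcean-specific keys, on the assumption that s3cmd falls back to its built-in defaults for any key that is missing from the file. `write_s3cfg` and its arguments are hypothetical names used for illustration.

```shell
#!/bin/sh
# write_s3cfg is a hypothetical helper that writes a minimal s3cmd config.
# Arguments: target file, access key, secret key, region (for example sfo2).
# The body runs in a subshell so the umask change does not leak to the caller.
write_s3cfg() (
    cfg_file=$1; access=$2; secret=$3; region=$4
    endpoint="${region}.digitaloceanspaces.com"
    # The file holds credentials: a newly created file is owner-readable only.
    umask 077
    cat > "$cfg_file" <<EOF
[default]
access_key = ${access}
secret_key = ${secret}
host_base = ${endpoint}
host_bucket = %(bucket)s.${endpoint}
use_https = True
EOF
)
```

For example, `write_s3cfg "$HOME/.s3cfg" yourkey yoursecret sfo2` writes the default config file. To keep it somewhere else, pass the path to s3cmd with `-c`, as in `s3cmd -c /path/to/file ls`.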

Common s3cmd commands

Create a new bucket:

`s3cmd mb s3://mybucket`

List all buckets:

`s3cmd ls`

List the files in a bucket:

`s3cmd --recursive ls s3://demobucket # --recursive lists subdirectories recursively`

`s3cmd --recursive ls s3://demobucket/rgw`

Upload a file:

`s3cmd put demo.xml s3://demobucket/demo.xml`

Upload directories:

`s3cmd put --recursive dir1 dir2 s3://demobucket/dir1/ # the target directory does not need to exist; it is created automatically during upload`

Download a file:

`s3cmd get s3://demobucket/demo.xml demo2.xml`

Download a directory:

`s3cmd get --recursive s3://demobucket/dir1`

Download with the directory tree:

`s3cmd get --recursive s3://demobucket/dir1/*`

Delete files:

`s3cmd del s3://demobucket/demo.xml`

`s3cmd del --recursive s3://demobucket/dir1/ # the entire directory tree`

Delete a bucket:

`s3cmd rb s3://demobucket # the bucket must be empty; otherwise --force is required to force deletion`

Synchronize:

`s3cmd sync ./ s3://demobucket # sync all files in the current directory`

`s3cmd sync --delete-removed ./ s3://demobucket # also delete remote files that no longer exist locally`

`s3cmd sync --skip-existing ./ s3://demobucket # skip files that already exist at the destination, without MD5 verification`
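Because `--delete-removed` deletes remote files, it can be worth previewing a sync with s3cmd's `--dry-run` option before applying it. The following is a minimal sketch of such a wrapper. `sync_dir` and the `S3CMD` override variable are assumptions made for this example, not part of s3cmd.

```shell
#!/bin/sh
# Command to run; overridable so the wrapper can be tried without a real bucket.
S3CMD=${S3CMD:-s3cmd}

# sync_dir is a hypothetical helper.
# Usage: sync_dir <local-dir> <s3://bucket/prefix/> [preview|apply]
sync_dir() {
    src=$1; dest=$2; mode=${3:-preview}
    case $mode in
        # preview: only report what would be uploaded or deleted
        preview) "$S3CMD" sync --dry-run --delete-removed "$src" "$dest" ;;
        # apply: perform the upload and the remote deletions
        apply)   "$S3CMD" sync --delete-removed "$src" "$dest" ;;
        *)       echo "unknown mode: $mode" >&2; return 2 ;;
    esac
}
```

Running `sync_dir ./ s3://demobucket/` shows the planned changes, and `sync_dir ./ s3://demobucket/ apply` carries them out. Defaulting to preview means a missing third argument never deletes anything.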

Advanced synchronization operations

Exclude and include rules (`--exclude`, `--include`):

`s3cmd sync --exclude '*.doc' --include 'dir2/*' ./ s3://demobucket/`

Load exclude or include rules from a file (`--exclude-from`, `--include-from`):

`s3cmd sync --exclude-from demo.txt ./ s3://demobucket/`

Contents of demo.txt:

`# comments here`

`*.jpg`

`*.png`

`*.gif`
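The exclude file from the demo.txt example can also be created from a script, which is convenient when the sync runs unattended. This is a minimal sketch; `write_excludes` is a hypothetical helper name, and the patterns are the ones listed above.

```shell
#!/bin/sh
# write_excludes is a hypothetical helper: it writes one glob pattern per line
# to the given file. Lines starting with # are comments.
write_excludes() {
    cat > "$1" <<'EOF'
# image files are not uploaded
*.jpg
*.png
*.gif
EOF
}

# count_patterns prints the number of active (non-comment, non-empty) lines.
count_patterns() {
    grep -c -v -e '^#' -e '^$' "$1"
}
```

After `write_excludes demo.txt`, a preview run such as `s3cmd sync --dry-run --exclude-from demo.txt ./ s3://demobucket/` shows which files would still be uploaded.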

Exclude or include paths using regular expressions:

`--rexclude, --rinclude, --rexclude-from, --rinclude-from`
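Before passing a regular expression to `--rexclude`, you can check locally which files it would catch. The sketch below lists the relative paths under a directory that match a pattern, using `grep -E`. It assumes the pattern is simple enough to behave the same way in `grep -E` as in s3cmd's own matching, so treat the result as an approximation. `preview_rexclude` is a hypothetical helper name.

```shell
#!/bin/sh
# preview_rexclude is a hypothetical helper: it lists the files under a
# directory whose relative path matches an extended regular expression.
preview_rexclude() {
    dir=$1; pattern=$2
    # Run in a subshell so the caller's working directory is unchanged.
    ( cd "$dir" && find . -type f | sed 's|^\./||' | grep -E -- "$pattern" | sort )
}
```

For example, `preview_rexclude ./ '\.(jpg|png|gif)$'` lists the image files that `s3cmd sync --rexclude '\.(jpg|png|gif)$' ./ s3://demobucket/` would be expected to skip.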

Example: syncing files to DigitalOcean Spaces with s3cmd

`/usr/local/bin/s3cmd sync /var/www/demo s3://demo/ --acl-public`

To run s3cmd in the background:

`nohup /usr/local/bin/s3cmd sync /var/www/demo s3://demo/ --acl-public &`
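When the sync runs in the background, it is useful to keep its output in a log file and to know the process ID so you can check on it later. The following is a minimal sketch under those assumptions. `sync_in_background`, the `S3CMD` override variable, and the log path are names chosen for illustration.

```shell
#!/bin/sh
# Path to s3cmd; overridable for testing.
S3CMD=${S3CMD:-/usr/local/bin/s3cmd}

# sync_in_background is a hypothetical helper.
# It starts the sync detached from the terminal, appends stdout and stderr
# to a log file, and prints the PID of the background job.
sync_in_background() {
    src=$1; dest=$2; log=$3
    nohup "$S3CMD" sync --acl-public "$src" "$dest" >>"$log" 2>&1 &
    echo $!
}
```

A call such as `sync_in_background /var/www/demo s3://demo/ /tmp/s3sync.log` returns immediately; `tail -f /tmp/s3sync.log` then follows the progress.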